COMPANION

to

CORRELATION

is

UNDER CONSTRUCTION

by

Lubomyr Prytulak

©2006

First posted online 04-Apr-2003

Last updated 15-Feb-2006 23:54

Hit F11 on your keyboard to expand readable area.

|

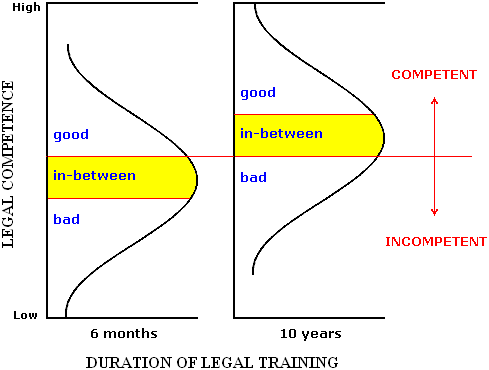

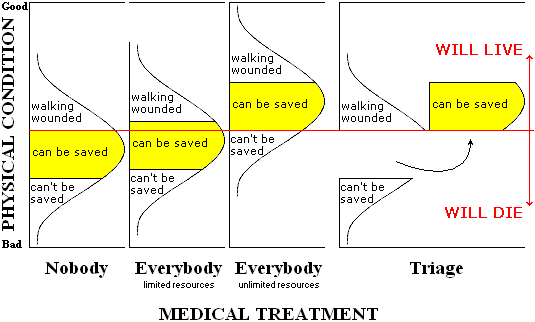

Scientific research is divided into two great categories � correlational and experimental. Within the correlational category, the two most fundamental concepts are the correlation coefficient and the regression equation. These two concepts are the subjects of this book.

Since their introduction toward the end of the last century, the correlation coefficient, and to a lesser extent its companion, the regression equation, have permeated every branch of learning. After the mean and standard deviation, they are perhaps the most widely used of all statistical tools. Tens of thousands of them are published annually. Middle-priced hand calculators display them, no computation required on the part of the user, for any data one might care to punch in. They have escaped from the hands of experts, and passed into the hands of the people.

For tools of such central importance to scientific method and that find such widespread use, however, they receive surprisingly cursory treatment in research education. Following a demonstration of their basic equations and computations, statistics texts most often present a paragraph or two of cautions: see that the relationship is linear, don't generalize to untested values of the independent variable, don't jump to cause-effect conclusions, and little else. The result of such brevity of guidance is that as an interpreter of data � whether it be someone else's or his own � the student is left a babe in the woods. His evaluation of research is shallow and he remains blind to the grossest fallacies. It is in the midst of that darkness that the present book attempts to light a candle.

The premise on which this book is based is that to acquire minimal competence in the handling or the understanding of correlational data, the student must master not a few paragraphs of elaboration, but a whole book. This elaboration, furthermore, is necessary to a low-level understanding of no more than the correlation coefficient and the regression equation. For the more advanced topics that might be covered in an introductory statistics course � such as testing the correlation coefficient for statistical significance, computing confidence intervals for the slope of the regression equation, multiple correlation, or non-parametric measures of association � other elaborative texts will need to be written.

The present book is inspired by Campbell and Stanley's (1966) little masterpiece, Experimental and Quasi-Experimental Designs for Research. As their title suggests, Campbell and Stanley deal mainly with that other category of research � experimental. Nevertheless, they devote some nine pages to correlation, and those nine pages contain the clearest and most helpful introduction to the topic that I know of.

Campbell and Stanley's approach is unique in three ways. They focus attention on the very points that most often lead researchers astray. They demonstrate their principles non-mathematically, in graphs and tables rather than in equations. They illustrate their principles over a range of examples � hypothetical ones that offer the advantage of simplicity and clarity, and real ones that demonstrate relevance and applicability. The present book follows their example � it attempts to be practical, non-mathematical, and concrete. It differs from Campbell and Stanley in that it proceeds more slowly, in smaller steps, offers greater detail, and covers a much broader range of topics.

The chief use of this book, I envision, will be to complement and enrich a mathematical introduction to correlation and regression, of the sort that is offered in introductory statistics courses. The absence � or at least the scarcity � of formulas and equations within the body of the book carries the advantage of avoiding any clash of notations or approaches with those the student is learning in class. As data collection in science is typically numerical, it is impossible in attempting to understand such data to avoid some light mathematics, but this will never exceed an elementary-school level.

Upon thumbing through the book, the illustrations may seem daunting, and may suggest that a high level of mathematical competence will be required to understand them, but such is far from the case. Approached gradually, their meaning will be laid bare with less trouble than was imagined.

For the reader who happens not to be simultaneously enrolled in an introductory statistics course, however, the present book can be read alone. Comprehension does not depend upon being able to actually compute a correlation coefficient or a regression equation from raw data. In case such an independent reader does wish to be able to perform such computations, however, an appendix has been provided showing how he can do so. Buying a calculator that does the work is an alternative � for someone not going on to a mathematical study of the subject, little is to be gained from learning to work with the formulas when an easier way presents itself. However it is accomplished, though, being able to verify the various coefficients reported on the pages below may inspire the reader with confidence, and being able to calculate them for data of his own may encourage him to join the ranks of those who contribute to scientific knowledge.

The study of scientific method serves to reveal not only which instances of research conform to scientific method and which don't, but serves to reveal also which everyday utterances conform to scientific method and which don't. Scientific method is not merely a tool applicable to the laboratory, it is a tool applicable to all of life. Thus, one thing that can with confidence be expected from learning scientific method is that it will transform one's perception of everything that is said, and that the principles laid out in the present book will be discovered to have practical application many times each day. Many skills that one may acquire have some chance of eventually lying unused � we may study physics or chemistry or piano or photography or French or Computer Science � and may find that life takes us in directions in which our learning is rarely applied, but such an eventuality is impossible in the case of scientific method, as scientific method is the most powerful tool that mankind has discovered, and the most universally applicable. To fail to apply scientific method every day of one's life is to stop talking, stop reading, stop thinking, stop planning, stop trying to change the world.

In learning to apply scientific method either in the laboratory or out, one of the most useful skills is that of translating every problem into a table or a graph. Plentiful practice is provided for this skill, and the reader will benefit not only from following carefully what is said about each table or graph, but even more by creating new tables and graphs of his own. Some of what is written below is valuable primarily in teaching the skill of translating commonplace situations into tables and graphs, and if that were the only skill that were taught, it would be a valuable one.

Chapter 1

Basic Correlation and Regression |

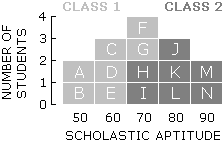

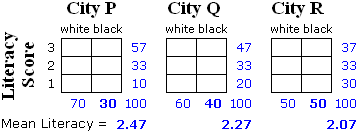

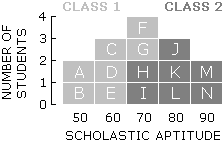

Some Useful Idealized Data

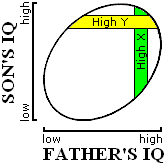

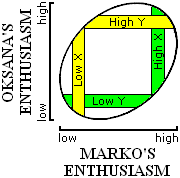

An IQ test, let us imagine, is administered to 27 fathers. This IQ test is one on which it is possible to score from 0 to 20. The scores of the fathers come out as shown in blue in Display 1-1. That is, three fathers got 10, six got 11, and so on. The mean of all the father scores (we can compute, or simply see by inspection) was 12.

As it happens, each of these fathers had a single son, and the same IQ test was administered to each of the sons. As can be seen in red below, the son scores happened to distribute themselves in exactly the same way as the father scores � that is, three sons go 10, six got 11, and so on.

Display 1-1. The left frequency distribution shows imaginary IQ scores for 27 fathers, and on the right, for their 27 sons.

|

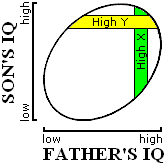

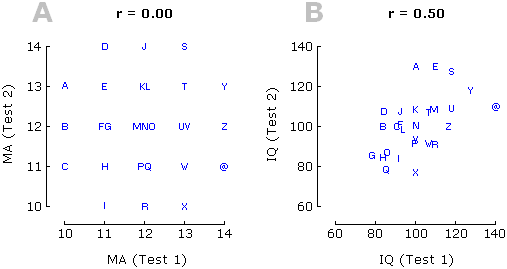

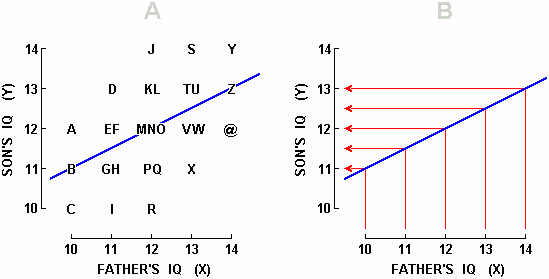

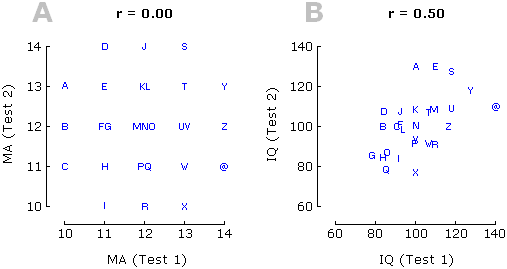

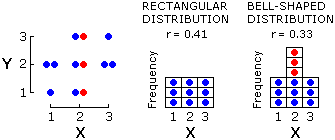

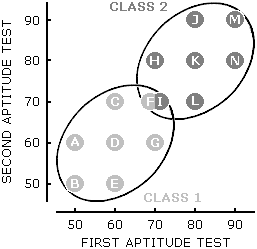

Now although it may seem that we have been told everything there is to know about the data collected, there is one piece of information still missing � the correlation between the IQs of the fathers and the IQs of the sons. The scatterplot in Display 1-2A shows one of the many possible correlations that is compatible with what we have been told so far. Display 1-2A, also, happens to resemble what we would be likely to find if we did carry out the imaginary study that we are discussing � we would find that father's and son's IQs were correlated, but not perfectly.

Which variable goes on the X axis?

In Display 1-2, we place father's IQ along the X axis and son's IQ along the Y axis � but why not the other way around?

Our convention will be to place on X whichever of the two variables is available for measurement earlier, and the other on Y. Thus, father's IQ goes on the X axis because normally we would be able to measure father's IQ years before the son is born.

Should the temporal rule not apply, we would place whichever variable seemed more likely to be the cause on X, and whichever seemed more likely to be the effect on Y. For example, if we found a correlation between coffee drinking and heart disease, we would place coffee drinking on X because we guessed that coffee drinking more likely caused heart disease than that heart disease caused coffee drinking.

If both of these rules failed, we would place whichever of the two variables was more stable on X, and the other on Y. In a correlation between height and weight, for example, we would place height on X because a person's height is more stable than his weight.

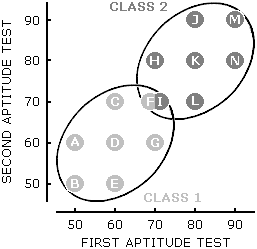

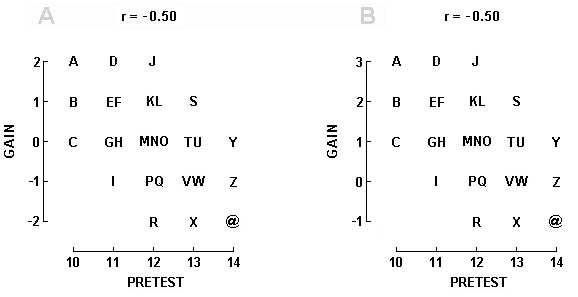

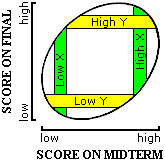

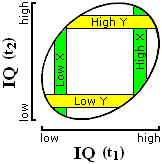

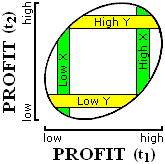

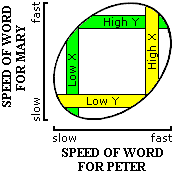

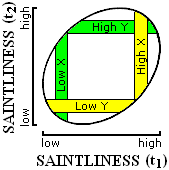

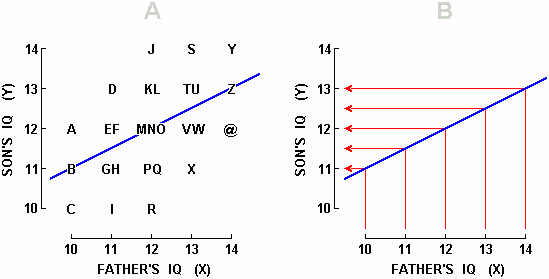

Display 1-2. A correlation of 0.50 between father IQ and son IQ � one of the many correlations that satisfy the distributional requirements for both fathers and sons laid down in Display 1-1. In both graphs above, Y is being predicted from X. In Graph B, individual data points have been omitted, and bent arrows showing prediction from each integer value of X have been inserted. Clumping of the arrows around the mean Y value of 12 constitutes the phenomenon known as "regression toward the mean."

|

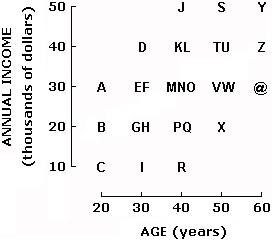

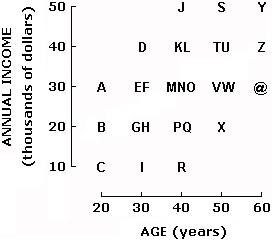

To continue, we note that Display 1-2A contains the 26 letters of the alphabet along with the @ symbol. Each of these 27 symbols provides two pieces of information about each of the 27 father-son pairs � the IQ of the father, and the IQ of his son. Take point A, for example. By looking below it, we see on the X axis that the father scored 10, and by looking to the left of it, we see on the Y axis that his son scored 12. Or, in the case of point U, we see that the father scored 13 and his son also scored 13.

Usually, a scatterplot such as the one in Display 1-2A contains dots rather than letters. We are using letters right now because they make it easier for us to specify which data point, or which set of data points, we are talking about.

It is important to not forget that in Display 1-2A above (as well as Displays 1-3A and 1-4 below), the distributions shown in Display 1-1 continue to be representative of both fathers and sons. That is, we can see in Display 1-2A that three fathers scored 10 (points A-C) and three sons scores 10 (points C, I, R); six fathers scored 11 (points D-I) and six sons scored 11 (points B, G, H, P, Q, X); and so on.

Prediction

What would we do if after gathering the data in Display 1-2A, an untested father walked into the laboratory and asked us to predict what his unborn son's IQ will be?

If we did not know the IQ of this 28th father, the best we could do would be to predict that his son would have the mean IQ of sons in general, which is to say all the sons that we had ever measured � and that grand mean is 12. But if we tested this 28th father and found that his IQ was 10, we would change our prediction for this son to the mean IQ of all the sons whose fathers had scored 10 in the past. How many sons can we find, all of whose fathers scored 10 in the past? We can find three such sons, represented by points A-C. What was the mean IQ of these three sons? Son A had 12, B had 11, and C had 10; the mean IQ is (12+11+10)/3 = 11. We would predict, then, that the new father whose IQ is 10 will have a son whose IQ is 11.

Let's generate another prediction. Suppose that the new father turns out to have an IQ of 13. What do we predict his son's IQ will be? First, we locate all the sons whose father's scored 13 in the past. We find six such sons (points S-X). Next, we compute the mean IQ of these six sons: son S had 14, T had 13, U had 13, and so on, so that the mean is (14+13+13+12+12+11)/6 = 12.5. This mean is our prediction. That is, if the new father has an IQ of 13, we predict that his son will have an IQ of 12.5.

Generally, to predict Y when we are not given X, we predict the mean of all the Ys; and when we are given X, we predict the mean Y of all the points having that X. When we are given an X, then, that X tells us which column of data points to look at, and the mean Y of the data points in that column is our predicted Y. A "predicted Y," furthermore, is often written in the literature as a Y with an accent circumflex above it, but due to the difficulty of writing letters with an accent circumflex, the convention adopted here will be to write Yted, where "ted" stands for "predicted" and is contrasted with "tor" which stands for "predictor". A Yted, in our idealized data, is simply the mean Y of the data points in a given column. Note too that as in each column the data points are symmetrical above and below Yted, we can quickly learn to see Yted by inspection: it lies at the midpoint between highest and lowest data points.

Next, let us indicate the locations of the Yteds by drawing a line through them � this line is called a regression line. In Display 1-2A, the regression line is the positively-sloped blue line passing through the middle of the graph. If we are interested not in the original data, but only in being able to predict Y from X, we could use Display 1-2B which presents only the regression line (blue) and altogether leaves out the data points which told us where to put that blue line. In Display 1-2B, predicting Y from X would proceed by following one of the vertical red lines up to the regression line, then following that red line left. Readers wishing to know how to compute the equation of the regression line can consult Appendix C.

Regression Toward the Mean

In Display 1-2, we get our first glimpse of a curious phenomenon � whenever we predict a son's IQ, we predict he will be closer to average than his father. Thus, we see in either graph in Display 1-2 that fathers who score 10 have sons who average 11 � the dullest fathers have sons who are not quite as dull. At the high end of the X axis, we discover that fathers who score 14 have sons who average only 13 � the brightest fathers have sons who are not quite as bright. Our predictions are always less extreme, closer to average, or we may say "regressed toward the mean," which is why this phenomenon is known as regression toward the mean.

Let us be clear what regression toward the mean signifies. In a particular instance, we saw that the predicted 12.5 was closer to the mean of all the sons' scores than the predictor 13 was to the mean of all the fathers' scores. Restating this more generally, when Y is being predicted from X, regression toward the mean conveys that predicted Yted is closer to Mean-Y than predictor Xtor is to Mean-X. The predicted score is closer to the mean of all the predicted scores, than the predictor score is to the mean of all the predictor scores.

Regression Increases With the Extremity of the Predictor

Let us agree, to begin, on when a predictor is "extreme." A predictor is more extreme the father away it is from its mean. Thus, if the new father scores 12, that predictor score of 12 is not at all extreme, because it falls right on the mean. If the new father scores either 11 or 13, then that predictor is slightly extreme because it lies one unit away from the mean. And if the new father scores either 10 or 14, then that predictor is very extreme because it lies two units away from the mean.

Maximally extreme predictor. We have already seen that when the predictor is maximally extreme (10 or 14), the prediction regresses one whole unit � fathers who scored 10 (and so were two units below the fathers' mean) had sons who averaged 11 (and so were only one unit below the sons' mean), and fathers who scored 14 (and so were two units above the fathers' mean) had sons who averaged 13 (and so were only one unit above the father's mean).

Moderately extreme predictor. Let us note now that when the predictor is only moderately extreme (11 or 13), the prediction regresses less, only half a unit � fathers who scored 11 (and so were one unit below the fathers' mean) had sons who averaged 11.5 (and so were only half a unit below the sons' mean), and fathers who scored 13 (and so were one unit above the fathers' mean) had sons who averaged 12.5 (and so were only half a unit above the sons' mean).

Not at all extreme predictor. Finally, let us note that when the predictor is not at all extreme (when it is the mean, 12), then the prediction regresses not at all � fathers who scored 12 had sons who averaged 12.

That is why it is said that regression increases with the extremity of the predictor.

But from the very same data that demonstrates that the amount of regression increases with the extremity of the predictor, we are also able to conclude that the degree of regression is constant. That is, no matter what the extremity of the predictor, the extremity of the prediction will (in the data we are considering) be half as great, which is to say will be lower by a factor equal to 0.50, which for this particular set of imaginary data happens also to be the correlation coefficient, a concept that will be discussed further below.

In the context of our example, we predict that the son of an average father will be just like his father, that the son of an above-average father will be somewhat below his father, and that the son of a far-above-average father will be considerably below his father. Or, moving toward the lower end, we predict that the son of a below-average-father will be somewhat above his father, and that the son of a far-below-average father will be considerably above his father. But doesn't that sound like the sons are going to vary less than the fathers? It certainly might seem that way, but such a conclusion would be erroneous.

Regression Does Not Imply Decreased Variance

If Display 1-2 forces us to acknowledge that bright fathers are having duller sons and that dull fathers are having brighter sons, doesn't that imply that the sons are less variable � clumping closer around the average � than the fathers?

No, it does not. We have already seen � and can verify afresh in Display 1-2A � that in each generation there are three people who score 10, six who score 11, and so on. We know, therefore, that the sons vary exactly as much as the fathers. The sons varying less is an illusion. Our predictions for the sons do vary less than the predictor scores, it is true, but the sons' actual scores vary just as much.

What confuses us, perhaps, is that when the three fathers who scored 14 (points Y, Z, and @) manage to produce only a single son who scores 14 (point Y), it seems as if the next generation's quota of 14s is not going to get filled. But, as Display 1-2A reveals, three sons do manage to score 14 (points J, S, Y). One of these sons had a father who scored 14 (point Y); the other two sons had fathers who scored 13 (point S), and 12 (point J).

Thus, a 14-son is most likely to come from a 14-father (1/3 of all 14-fathers produced a 14-son), is less likely to come from a 13-father (1/6 of all 13-fathers produce a 14-son), and is still less likely to come from a 12-father (1/9 of all 12-fathers produced a 14-son. We see that although a 12- or a 13- father is less likely than a 14-father to produce a 14-son, because the number of 12- and 13-fathers is large (there are fifteen of them) compared to the number of 14-fathers (there are only three of them), it is the 12- and 13-fathers who contribute the majority of 14-sons. Thus, Display 1-2 shows that 2/3 of the 14 sons come from fathers who scored less than 14.

The principles of regression, then, tell us: (1) that the sons most likely to become brilliant are the sons of Bertrand Russell, Albert Einstein, and the like; (2) on the average, though, these sons will be less brilliant than their fathers; (3) the next generation's quota of brilliant sons will be filled mostly by fathers who are not themselves brilliant � not because such fathers have a better chance of producing brilliant sons (their chances are poorer), but because there are so many such fathers.

The same thing happens at the lower end of the X axis: (1) sons of the dullest fathers have the highest probability of being dullest themselves; (2) on the average, though, these sons will be less dull than their fathers; (3) the next generation's quota of dullest sons will be filled mainly by fathers who are not themselves dullest � not because such fathers have a better chance of producing dull sons (their chances are poorer), but because there are so many such fathers.

Regression Works Both Ways

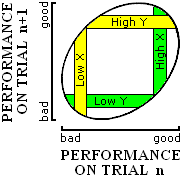

Exactly the same principles hold whether we predict Y from X (as we have been doing above), or X from Y (as we are about to do below). To the English proverb, "A wise man commonly has foolish children," we are about to add our own, "A wise child commonly has foolish parents." The proverbs would become bulkier but more accurate if they were expressed with the following qualifications: "A wise man commonly has somewhat more foolish children, but still above average," and "A wise child commonly has somewhat more foolish parents, though still above average."

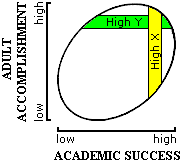

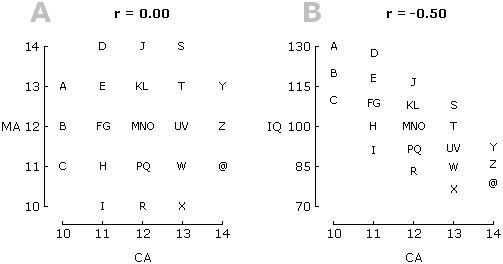

Tracing this phenomenon in the graph, we can imagine that instead of a 28th father, a 28th son walks into our laboratory and asks us to predict the IQ of his father. Before testing the son, the best we can do is to guess the mean IQ of all fathers (12). But if we test the son, we can venture a better prediction.

If the new son were to score 10 (his Y=10), then looking at Display 1-3A (which contains exactly the same data as Display 1-2A), we can restrict our attention to the mean of only those fathers whose sons scored 10 in the past. We see that there are three fathers whose sons scored 10 (C, I, R), and that as son C had a father with 10, "I" had a father with 11, and R had a father with 12, the mean father's IQ is (10+11+12)/3 = 11. Whenever sons got 10, their fathers averaged 11. If this does not have a familiar ring, you should go back a few pages and read up to the present point more carefully.

And now if, at the other extreme, the new son were to score 14 (his Y=14), we can quickly calculate that sons who previously got 14 (J, S, Y) had fathers who averaged 13.

Dull sons (whose mean is 10), in other words have fathers who on the average are not so dull (whose mean 11), and bright sons (mean 14) have fathers who on the average are not so bright (mean=13). What is going on?

Simply that regression works both ways. We find regression when we predict Y from X (Display 1-2) as well as when we predict X from Y (Display 1-3). If we put a line through the Xpredicted in each row in Display 1-3A, we get a regression line, only it is not the same one as before.

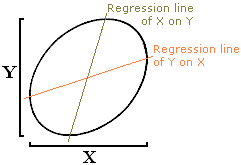

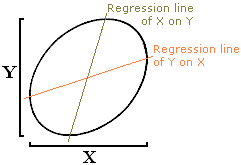

Generally, every scatterplot has two regression lines � one for predicting Y, as in Display 1-2 (known technically as the regression line of Y on X), and the other for predicting X, as in Display 1-3 (known technically as the regression line of X on Y). Display 1-3B, which contains the regression line for predicting X but not the individual data points from which the line originated, shows predictor scores on the Y axis leading to predictions on the X axis that are clumped toward the mean X of 12.

Display 1-3. The same scatterplot as in Display 1-2A, but this time with X being predicted from Y. Here, prediction starts from integer values of Y, and regression makes its appearance as the clumping around the mean X of 12 of the arrowheads which represent Xted values.

|

Having been forewarned by our discussion above, it is difficult for us now to be misled into supposing that fathers' IQs are less variable than sons' IQs � we know that predictions for fathers' IQs are less variable; actual fathers' IQs aren't; Xteds are less variable, Xs aren't. Remember that Xted stands for X-predicted.

Bi-directional regression is not merely an occasional phenomenon, or even a dependable one � it is inescapable. Whenever we have two imperfectly-correlated variables, we will find regression going from one to the other, and regression again on the way back. It is, as we shall see below, all around us. It is not surprising, then, that the phenomenon sometimes catches the attention of non-statisticians. Here, for example, is Woodrow Wilson addressing himself to a question which bears some resemblance to the one we have been discussing: What families will tomorrow's leaders emerge from?

Do you look to the leading families to go on leading you? Do you look to the ranks of men already established in authority to contribute sons to lead the next generation? They may, sometimes they do, but you can't count on them; and what you are constantly depending on is the rise out of the ranks of unknown men, the emergence of somebody from some place of which you had thought the least, to do the thing that the generation calls for. Who would have looked to see Lincoln save the nation? (cited in Padover, 1942)

That is, leading families tend to produce less-leading sons, and leading sons tend to emerge from less-leading families � that is simply regression in both directions, a phenomenon that is readily understood with the help of the scatterplots we examined above, but which without the help of graphs, seems mysterious and paradoxical.

Other Correlations

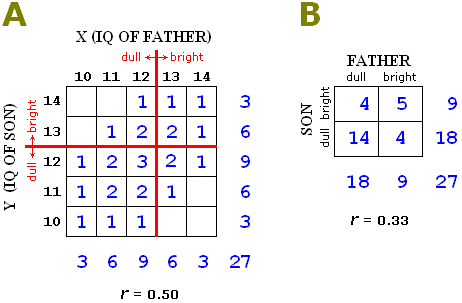

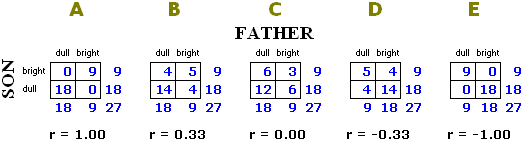

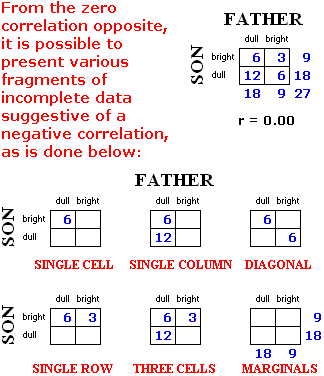

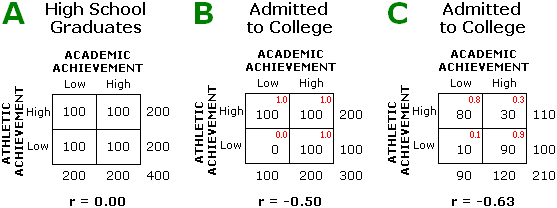

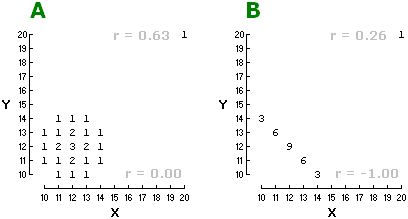

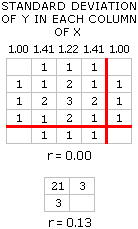

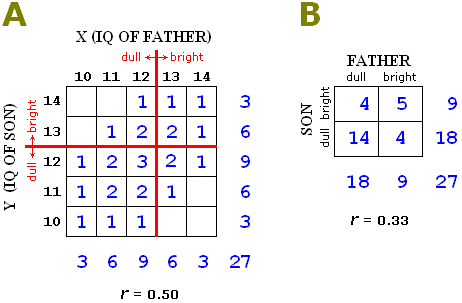

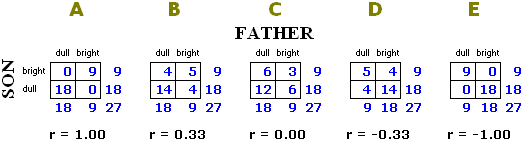

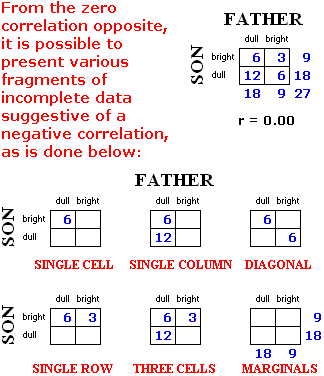

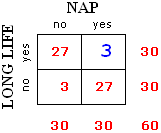

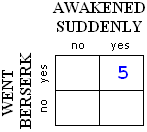

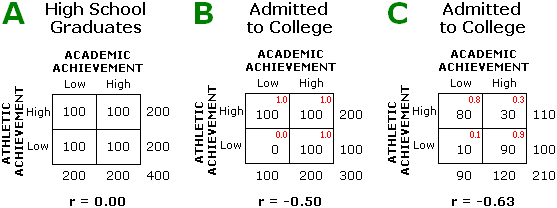

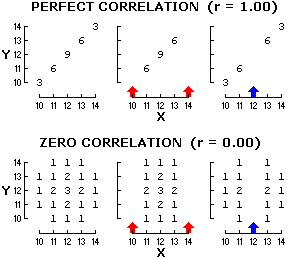

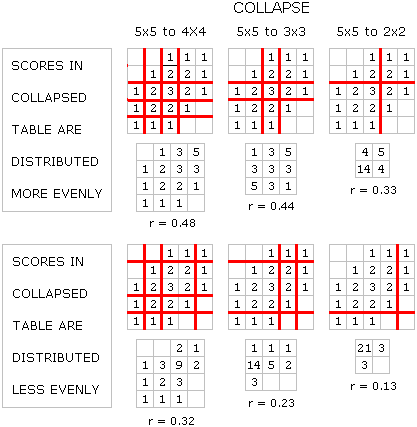

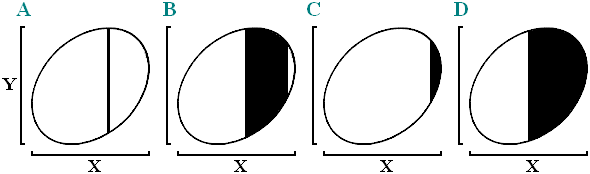

The correlation that we have been working with in Displays 1-2 and 1-3 is not the only one that is compatible with the distribution requirements for fathers as well as for sons laid down in Display 1-1. Display 1-4 repeats the correlation shown in Displays 1-2 and 1-3, but also shows four new correlations. But let us begin my locating in the monster graph below the two graphs with which we have been familiarizing ourselves above.

Our old Display 1-2 above can be found in Display 1-4B below. What is new is that instead of representing each father-son pair with a capital letter or an @, we now use a number to specify how many father-son pairs fall in each position in the graph. For example, points A, B, and C in Display 1-2A are now each represented by a 1 in Display 1-4B, points E and F are represented by a single 2, and points M, N, and O by a single 3. The blue arrows just above the X axis mark the five possible predictors � the Xtors � and the blue arrows just to the right of the Y axis mark the corresponding five possible Yteds.

In the same way, Display 1-4G summarizes Display 1-3. The difference between Displays 1-4B and 1-4G, then, lies not in the data but only in what is being predicted from what.

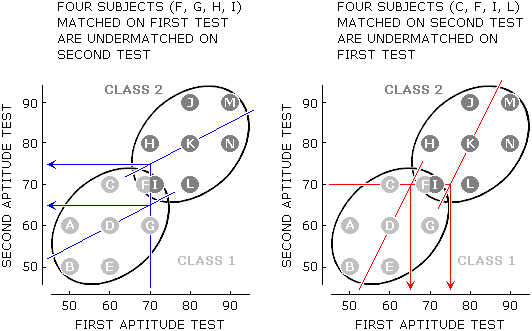

Display 1-4. Five different correlations between X and Y are shown when predicting Y (upper level) and repeated when predicting X (lower level). In every graph, both X and Y satisfy the distribution requirements laid down in Display 1-1.

|

Generally, Displays 1-4A to 1-4E on the upper level show five different correlations, and with Y always being predicted from X. Displays 1-4F to 1-4J on the lower level repeat the same five correlations, but this time with X being predicted from Y. And before we continue, one reminder: The frequency distributions shown in Display 1-1 do continue to hold for fathers as well as for sons in every single graph in Display 1-4. If you are unsure of this, stop now and convince yourself of it � in every graph, find three fathers who scored 10, then find three sons who scored 10, and so on.

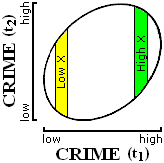

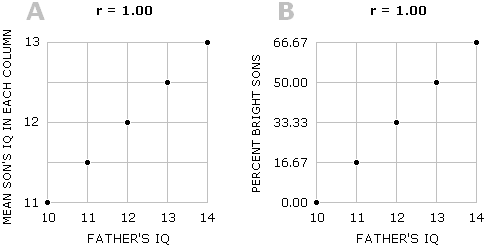

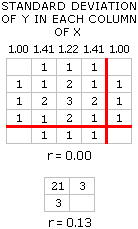

Let's start by taking a look at Display 1-4A, in which we find that the three fathers who scored 10 all happened to have sons who scored 10; the six fathers who scored 11 all happened to have sons who scored 11; and so on. In other words, Display 1-4A tells us, "Like father, like son" � every son got exactly the same score as the father. This relation is referred to as a perfect positive correlation. If the numerical value of the correlation coefficient were computed, it would equal 1.00. Expressed another way, r = 1.00. Whenever all the dots in a scatterplot fall on a straight line having a positive slope, then r = 1.00.

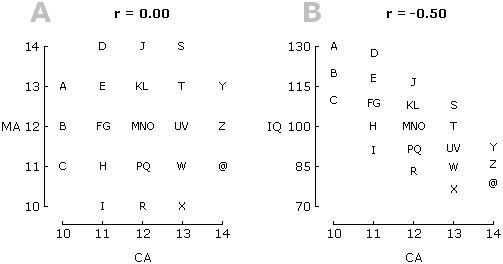

Display 1-4E, to shift to the other extreme of what is conceivable, shows a perfect negative correlation: r = -1.00. In Display 1-4E, all the dots fall on a straight line having a negative slope. In a positive correlation, high values of X are paired with high values of Y; in a negative correlation, high values of X are paired with low values of Y. Thus, in Display 1-4E, every one of the three low fathers who scored 10 each had a high son who scored 14, and every one of the three high fathers who scored 14 each had a low son who scored 10. Display 1-4E indicates that dull fathers have bright sons and bright fathers have dull sons.

Generally, r is able to range from +1.00 to -1.00. At these two extremes, all the dots fall right on a straight line having a positive or a negative slope, respectively.

When the dots cluster around a positively-sloped line but do not fall right on the line (as in Display 1-4B), however, a positive correlation is said to exist, but the correlation is not perfect and the numerical value of r will fall between 0.00 and 1.00 (in Display 1-4B, r happens to be 0.50); and as the dots cluster more tightly around the line, the numerical value of r approaches 1.00.

Similarly, when the dots cluster around a negatively-sloped line but do not fall right on the line (as in Display 1-4D), a negative correlation is said to exist, but the correlation is not perfect and the numerical value of r will fall between 0.00 and -1.00 (in Display 1-4D, r happens to be -0.50); and as the dots cluster more tightly around the line, the numerical value of r approaches -1.00.

When the data show no tendency to cluster around any sloped line (as in Display 1-4C where the dots fall in a circle), no correlation, or a zero correlation, is said to exist, and r = 0.00. Any reader who is curious to compute r for himself can consult Appendices A and B.

Regression Increases as the Correlation Weakens

Returning to perfect-positive-correlation Display 1-4A, we see that fathers who scored 10 had sons who averaged 10, fathers who scored 11 had sons who averaged 11, and so on. In other words, there is no regression toward the mean.

And in perfect-negative-correlation Display 1-4E, fathers who scored 10 (two units below the mean) had sons who averaged 14 (two units above the mean), so that the sons were just as extreme as their fathers. Or, fathers who scored 14 (two units above) had sons who averaged 10 (two units below), so that again, the sons were just as extreme as the fathers. There is no regression toward the mean in Display 1-4E either.

In perfect-correlation Displays 1-4A and 1-4E, then, there is no regression � Yted is as far from mean Y as Xtor is from mean X. We are forced to conclude that when a correlation is perfect (positive or negative), regression vanishes. Regression occurs only when the absolute value of r is less than 1.00, which can be expressed as |r| < 1.00.

Looking, finally, at Display 1-4C where r = 0.00, we note that no matter what Xtor is, Yted = 12. When Xtor = 10, Yted = 12; when Xtor = 11, Yted = 12; and so on. When r = 0.00, then, we may say that regression toward the mean is complete. No matter what the father's IQ, we always predict the son's IQ to be 12.

Because predicting the overall mean Y is exactly what we do when we have no information on X, we may say that when X is unrelated to Y, knowing X is of no use in predicting Y � whether we know X or not, we end up predicting mean Y. A zero correlation has no predictive utility whatever.

The same thing happens when X is being predicted from Y in the lower level of Display 1-4: no regression when the correlation is perfect (Displays 1-4F and 1-4J), some regression when the correlation is less than perfect (Displays 1-4G and 1-4I), and complete regression when the correlation is zero (Display 1-4H).

Summary of Regression Phenomena

In summary, we may note that in Display 1-4's upper row of graphs where we predict Y, as we move in from the outer Graphs A or E toward the inner Graph C:

the correlation weakens,

the regression line approaches the horizontal, and

the arrows which mark Yted values cluster more and more tightly around mean Y.

Similarly, in Display 1-4's lower level of graphs where we predict X, as we move in from the outer graphs F or J toward the inner Graph H:

the correlation weakens,

the regression line approaches the vertical, and

the arrows which mark Xted values cluster more tightly around mean X.

We might also make the following observations with respect to the angle between the two regression lines:

to say that the regression lines for predicting Y and for predicting X are identical is to say that the correlation is perfect (compare the regression lines in Graphs A and F, or Graphs E and J);

to say that the two regression lines are perpendicular (at right angles) is to say that the correlation is zero (compare Graphs C and H);

and to say that the two regression lines fall between identical and perpendicular is to say that the correlation falls somewhere between perfect and zero (compare Graphs B and G, or D and I).

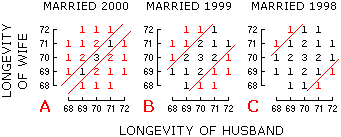

Examples of Real Correlations

Some actual correlations presented by Karl Pearson (1911, p. 21) are illustrative both of correlation magnitudes and as well of the diversity of variables that it is possible to correlate:

0.98 between the lengths of left and right femurs in man;

0.55 between a man's weight and his strength of pull;

0.21 between the size of family of mother and daughter;

0.05 between the length and breadth of Parisian skulls.

The above 0.05 correlation between length and breadth of Parisian skulls seems low when one considers that a child's skull will give two small numbers, and an adult's skull two large numbers; or a woman's skull will tend to give two numbers that are smaller than those given by a man's skull; or more generally that a person who is small for whatever reason will give two numbers that are smaller than those given by a large person � all of which should tend to produce a high correlation between length and breadth. One might conjecture, then, that the sample of skulls Pearson examined tended to be homogeneous, as perhaps all skulls of adult males, and that had he included measurements from children and women and dwarves and giants, then his correlation coefficient would have been high. One conclusion arising from questioning this strikingly low correlation � practically zero � is that it is not simply the case that two variables enjoy a single correlation, but rather that the correlation between them depends upon, among other things, the sample from which the data originate, a topic discussed in greater detail in Chapter 9 on the topic of Correlation Representativeness.

In the area of family resemblance in IQ, we may note (from Jensen, 1969, p. 49) that the father-son correlation that we have been considering above is indeed likely to be around 0.50, and is also likely to be equivalent to the father-daughter, mother-son, and mother-daughter correlations. The correlation between the IQ of a parent when a child and his own child is a bit higher, 0.56. Monozygotic twins give 0.87 when reared together, and 0.75 when reared apart. Siblings give 0.55 when reared together, and 0.47 when reared apart. Uncle (or aunt) and nephew (or niece) give 0.34. Grandparent and grandchild give 0.27. First cousins, 0.26; second cousins 0.16.

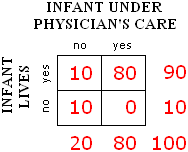

Nonsense Correlations

It is not uncommon for correlations of surprising magnitude to turn up between variables which seem to be unrelated. For example, Kerlinger and Pedhazur (1973, pp. 16-17) recorded the amount they smoked while writing different sections of their book, Multiple Regression in Behavioral Research. Later, the authors asked judges to rate the clarity of 20 passages from the book. The correlation between amount smoked while writing a passage and the clarity of that passage was a rather large 0.74. Similarly, Yule and Kendall (1950, pp. 315-316) report that from 1924 to 1937, the correlation between annual number of wireless receiving licences and number of mental defectives per 10,000 population was the almost-perfect 0.998, as well as that moving from north to south across Europe would probably produce a negative correlation between proportion of Catholics and average height.

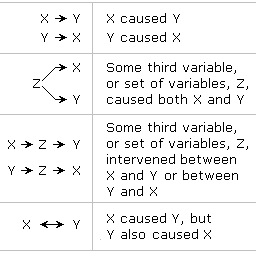

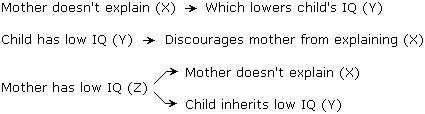

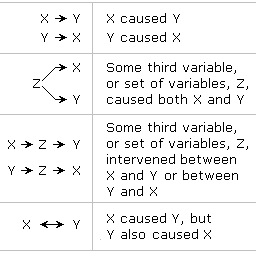

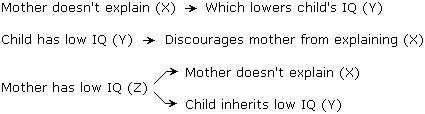

Such cases are likely to be explained in one of the following ways:

Perhaps the variables are indeed interconnected in some hitherto unanticipated manner.

The correlation is spurious, and will not be replicated at other times or in other places.

A low underlying correlation is being inflated by any of several methods, as will be discussed farther below, such as deletion of moderate values, or the presence of one or more outliers.

An error in computation.

Perhaps the surprisingly-high correlation coefficient is a fabrication.

Linear Transformations

Display 1-5 shows two graphs, each having the familiar r = 0.50 data in Display 1-2A. The only thing that has been changed is how we label the axes. Our point here is that relabelling axes changes neither the correlation coefficient nor any of our conclusions concerning regression.

In Display 1-5A, first of all, we suppose not only that the sons are more intelligent than the fathers (the sons' mean has now shot up to 16), but that they are more variable as well (the sons' range is now 16 compared to the fathers' range of 4). These two changes, however, affect none of our conclusions � the correlation coefficient continues to be 0.50, and regression can still be found. In the case of X = 14 and Yted = 20, for example, the prediction of 20 is less extreme (three sons scored higher) than the predictor of 14 (no father scored higher).

Display 1-5. The familiar r = 0.50 correlation is reproduced within both Graphs A and B, the only difference from previous graphs being how the axes are labelled, where label innovations are shown in green.

|

We qualify somewhat how we decide extremity. We decide a given score is more or less extreme on an axis not by looking at the units or the labels along that axis, but by looking at the given score's standing within its distribution � the fewer scores that exceed it, the more extreme it is.

No matter what the axis labels and units, we are always safe to expect that a predictor will be more extreme than the corresponding prediction. What we are not always safe to expect is that a high predictor will be higher than its corresponding prediction (or that a low predictor will be lower than its corresponding prediction). Thus, in Display 1-5A, the Xtor = 14 is more extreme than Yted = 20, but it is not higher. Xtor = 14 is more extreme because no father scored higher, and Yted = 20 is less extreme because three sons scored higher.

In the early Displays 1-2 and 1-3, we grew used to assuming that the value of a high predictor will be greater than the value of its corresponding prediction, but we were safe to do this only because X and Y had the same means and deviations about the means. When these conditions are not met, as in Display 1-5, we fall back on the safer and more general principle that the predictor will be more extreme within the distribution of predictor scores than is the prediction within the distribution of predicted scores.

Why, now, is this section titled "Linear Transformations"? Because the change of axis labels in Display 1-5 can be considered to be the result of multiplying the original labels by a constant, then adding another constant. For example, the Y axis in Display 1-5A can be viewed as the result of multiplying the X axis by 4, then adding -32; or Yted = 4Xtor - 32; which would be more conventionally, and succinctly, expressed as Y = 4X - 32. This method of transforming a dimension is referred to as a "linear transformation" because when the transformed units are plotted against the original units, they produce a straight line, as for example the blue regression line of Y = 4X - 32 in Display 1-5A.

We are able to express our conclusions both more succinctly and more precisely, then, by saying that a correlation coefficient is unaffected when one or both dimensions undergo a linear transformation. Another way of viewing a linear transformation is that it gives a dimension a new mean, or a new variance, or both, and that the correlation coefficient is insensitive to transformations of either the mean or the variance. Two qualifications, however, must be made. First, a transformation that involves multiplying by a negative value leaves the numerical value of a correlation coefficient unchanged, but reverses the sign. Second, a transformation that involves multiplying by zero leaves a variable that no longer varies, which results in an undefined correlation, a topic touched upon again in Chapter 9.

It is time now to test our understanding of linear transformations with a riddle. A researcher, we imagine, is investigating the relative contributions of heredity and environment to IQ. He takes one of his observations as suggesting a genetic component � the observation that mother-child IQ was 0.50. In an attempt to demonstrate the importance of environment, he subjects the children to an IQ-enrichment program, but is disappointed that when IQs are re-measured, the mother-child correlation continues to be 0.50. His conclusion: "Even two years of the most advanced IQ-enrichment program that we know how to provide" we imagine him writing "has failed to shake the iron grip of heredity on intelligence." In view of what we have just been discussing, is this researcher's conclusion justified?

Not at all. If, for example, the IQ-enrichment program had been wildly successful in that each child's IQ had increased 20% (multiply each IQ by 1.2), and also further increased by 20 points (then add 20), the children's IQs would have undergone a linear transformation which would not change the correlation of those IQs with any other variable, such as mother's IQ. Because the correlation coefficient is insensitive to changes in either the mean or the variance of its variables, even the astounding changes that we are imagining would leave the correlation coefficient unweakened. This researcher is performing the wrong analysis to answer the question he is asking, and in fact appears to be trying to conduct an experiment, which will be discussed in a separate volume.

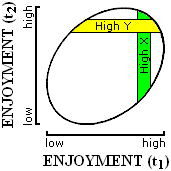

Autocorrelation and Lag Correlation

One way to get two variables for a scatterplot is to measure something twice with a fixed time interval between measurements, which produces an autocorrelation. Measuring children's IQs on one occasion, and then say two years later, would be an example. Display 1-5b shows an imaginary autocorrelation with a lag of ten years.

Another way to get variables is to measure two different things with a fixed time interval between measurements, which produces a lag correlation. Measuring people's IQs, then measuring their incomes two years later, would be an example.

In the case of autocorrelation, our usual finding is that the two scores are positively, but less than perfectly, correlated. Also, the greater the interval of time separating the two measurements, the lower the correlation is likely to be.

For example, when the closing price on the New York Stock Exchange of shares of Ford Motor Company are correlated with the same closing price the following day, r = 0.94; five days later, r = 0.69; ten days later, r = 0.45; and thirty days later, r = 0.12.

In the case of adult IQ, an immediate retest is likely to give a correlation of 0.90 with a fall of about 0.04 units per year, so that with a five-year interval, the correlation would be around 0.70 (Thorndike, 1933; Eysenck, 1953).

A similar principle holds in the case of lag correlation � the longer the interval between measurements, the more is the correlation likely to approach zero. Demonstrating that this effect can hold even over very short intervals, Stockford and Bissell (1949, p. 104) report that the rating given an item on a questionnaire was correlated 0.66 with the following item, 0.60 with the item after that, and so on until when five or more items intervened, was correlated 0.46.

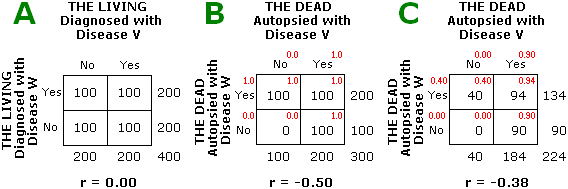

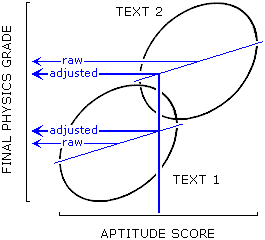

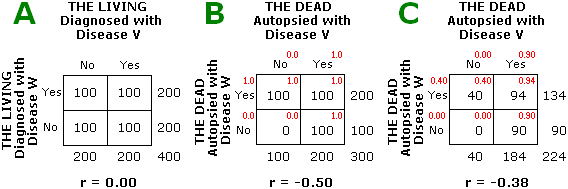

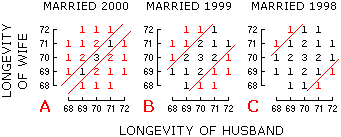

Gain Scores

In autocorrelations, if (in the phenomenon known as regression toward the mean) low initial scores rise and high initial scores fall, then gain must be negatively correlated with initial score.

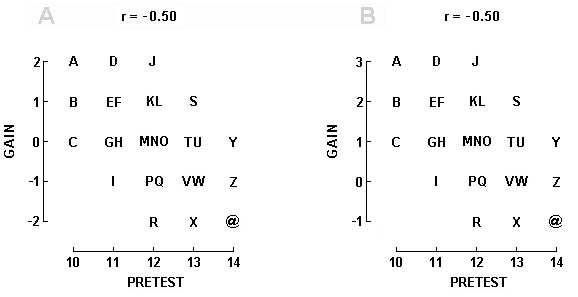

In Display 1-2A, for example, let us assume that the X axis is a person's IQ, and the Y axis is the same person's IQ twenty years later. To get the gain, we calculate posttest minus pretest. Thus, Person A starting with 10 and ending with 12 gained 12 - 10 = 2. When all the gain scores are plotted as in Display 1-6A, we find the expected negative correlation, which in this case happens to be -0.50.

What is important to recognize about Display 1-6A is that it conveys exactly the same information as Display 1-2A (except that we have changed the meaning of the axes). Thus, Display 1-6A conveys, underneath the surface, that the X and Y axis are positively, but less than perfectly, correlated, and that this necessitates some regression towards the mean, and that this regression towards the mean is particularly brought to attention by means of gain scores. Therefore, no further speculation as to what is happening is called for, or in fact is admissible, and any researcher who imagines from Figure 1-6A that he has discovered something other than the necessary working of every less-than-perfect correlation shows a lack of understanding of correlation and regression.

Display 1-6. Given a positive correlation between pretest and posttest, with data falling as it does in Display 1-2A, the correlation between pretest and gain scores will be negative as in Graph A. Graph B shows the same correlation, but now with a practice effect adding +1 to each score.

|

If, furthermore, there should be a practice effect from pretest to posttest � let us assume that previous exposure to the test permits all subjects to score one unit higher than they otherwise would, then the data would come out as in Display 1-6B � that is, the correlation would still be -0.50, but instead of low pretest scores rising and high pretest scores falling, we would now see low pretest scores rising (A, B, and C have a mean gain of 2.0) and high pretest scores not changing (Y, Z, and @ now have a mean gain of zero).

Precisely this pattern of results was found by Spielberger (1959) in his investigation of practice effects on the Miller Analogies Test (MAT), and had he recognized that practice along with regression completely accounted for his results, he might have felt it superfluous to speculate that "bright, psychologically sophisticated [subjects] would be most likely to profit from experience with a test such as the MAT, especially if their initial scores were depressed because they did not know what to expect on the test" (p. 261).

History

The basic techniques for analyzing correlational data were developed during the last two decades of the nineteenth century by Sir Francis Galton (1822-1911) and Karl Pearson (1857-1936). Before their contributions, scientists had no way of measuring the degree of association between two imperfectly-correlated variables, nor of generating optimal predictions from one variable to another with which it was imperfectly correlated.

Although we remember Galton here for his contributions to correlational theory, we note in passing that his career encompassed a colorful diversity of accomplishments, among them being explorations of Africa, the barometric weather map, and a system for classifying fingerprints that was adopted by Scotland Yard and eventually by the rest of the world. His work was guided by a passion for counting and measuring. One of his favorite maxims was, "Whenever you can, count" � a maxim he applied to such diverse phenomena as the rate of fidgeting among people attending a public meeting, and the attractiveness of women in different towns (which he recorded by means of a hidden device). Upon reading Charles Darwin's (his half-cousin's) Origin of Species in 1859, he concentrated his talents on questions of hereditary resemblance and evolution, for which work he has been accorded the title of "founder of the science of eugenics." The imaginary IQ data that we have been discussing in the present chapter, then, is precisely the sort of data that the techniques of correlation were initially developed to clarify.

Turning now to Galton's statistical contributions, we find that he pioneered the presentation of data in scatterplots, was the first to systematically discuss the phenomenon of regression toward the mean, to recognize that any scatterplot contained two regression lines and to plot them, and to note that these were frequently straight. To him, furthermore, we owe the introduction of the technical use of the word "correlation" as well as the introduction of r as a measure of the strength of an association. Initially, r stood for "reversion," was briefly abandoned in favor of w, and was finally reinstated, but this time standing for "regression."

This is not to say, however, that Galton brought matters to their contemporary state of development. His regression lines were fitted by eye rather than being computed mathematically, as they are today (see Appendices C and D), and his calculation of r, although giving much the same results as we would calculate today, was more primitive. That is, he proceeded by first transforming both sets of scores into deviation-from-the-mean scores, where for example the three raw scores 15, 16, 17 produce the three deviation-from-the-mean scores -1, 0, 1, and from which it can be seen that Galton's use of deviation scores had the effect of giving the means along the two dimensions the same mean of zero. Had Galton also known how to equate deviation, then his measuring the slope of the regression line would have given approximately the same correlation coefficient as modern computation gives today. The general rule is that when the standard deviations of the data on X and Y are equal (and at no other time), it so happens that the slope of the regression line (which, recalling high school geometry, equals rise over run) equals r (which the reader can verify for himself in Display 1-4 � although when predicting X from Y in the lower row of graphs, one must proceed as if the data had been replotted with the X values along the vertical axis and the Y values along the horizontal). Galton's r, then, was the slope of the regression line that he drew by eye after plotting his data as deviation scores.

To Karl Pearson, in turn, we owe the modern formulas for computing r, for which reason it is known today as "Pearson's r," and as well the modern formulas for computing the equations of the regression lines, as well as a host of elaborations and refinements which have contributed toward earning him the title of "founder of the science of statistics," but which fall beyond the scope of the present book.

From the Ideal to the Real

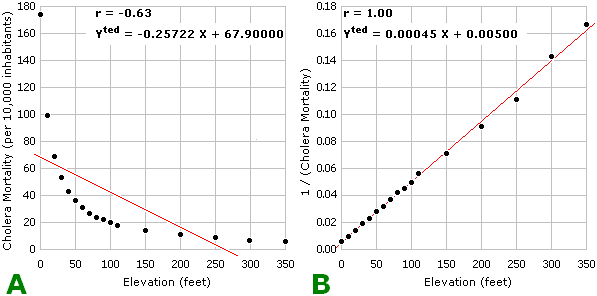

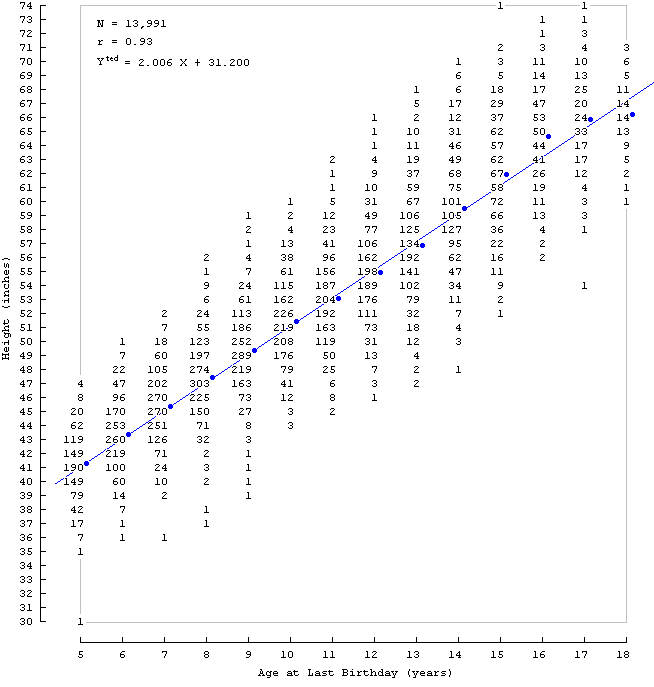

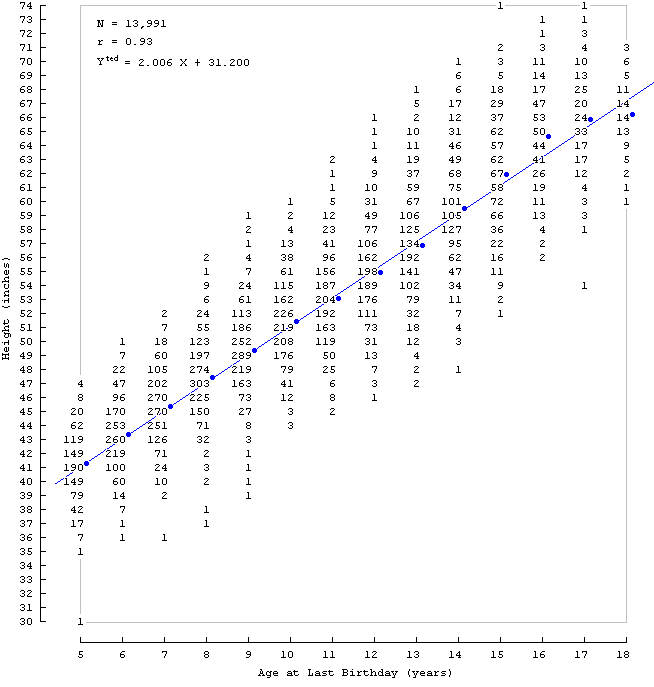

In the idealized data we have been considering so far, the regression line for predicting Y passes through the mean Ys in every column. In real data, however, this is rarely the case. Take Display 1-7, for example, which shows the relation between age and height for 13,991 Boston school boys, as presented by Bowditch (1877) in his Growth of Children.

Display 1-7. Bowditch (1877) scatterplot showing height of Boston school boys as a function of age. Blue dots show the mean height in each column, and the regression line of height on age is seen to pass close to, but not exactly through, each dot.

|

When we plot the mean Ys in the columns of Display 1-7 as blue dots, we find that they are not perfectly collinear. We are unable, therefore, to pass our regression line exactly through the mean Y at every X, and so are unable to fit the regression line accurately by inspection. We proceed, instead, by mathematically computing the equation for such a regression line, and then using the equation to draw the line in the graph, a technique explained in Appendices C and D. Having done this, we see that the correspondence between observed and theoretical values is close but not perfect.

One reason for this lack of correspondence is sampling error � the smaller the number of observations in each column, the less orderly are the column means. When we fit a regression line to such data, that line passes not through the observed means, but rather through where we expect the observed means would lie if the number of observations were increased. A second reason, however, is that the data may be non-linear, so that the discrepancy between observed an expected values would remain no matter how many observations were added. For example, perhaps there is a spurt in growth after the 16th birthday (with gains averaging more than two inches per year), and perhaps growth has begun to slow (with gains averaging less than two inches per year) after the 18th birthday.

We may note, incidentally, that these data have historical significance in that they represent one of the earliest known scatterplots. Their original presentation, of course, was without benefit of any measure of strength of association. Calculating this now, we find that r = 0.93. And here we find occasion for a second incidental observation � that a correlation numerically approaching the perfect correlation of 1.00 can correspond to a scatterplot containing considerable variation around the regression line. That 14-year-olds range in height from 48 to 70 inches, for example, may suggest a correlation lower than 0.93. We can avoid confusion on the matter as follows. If we visualize an ellipse enclosing the data in a scatterplot, the correlation will be higher the flatter the ellipse. By a flat ellipse is meant one that resembles not a circle, but begins to approach a line. In Display 1-7, then, a considerable range of heights at each age does not prevent the correlation from being very high because the range of ages represented still permits a flat ellipse.

Display 1-7 serves also to dramatize the chief use of descriptive statistics such as the correlation coefficient and the regression equation � which is to summarize. The raw data are too complex to remember and too unwieldy to communicate. Their chief features, however, can be condensed into the three lines in the graph showing N, r, and the regression equation.

The summary conveys the strength of the relationship, facilitates prediction from age to height, and by including sample size gives an idea of how dependable the summary is. It is a lot easier to remember and communicate, and by means of it certain details emerge with greater clarity, such as that over the age range considered, growth was almost precisely two inches per year (which we see in the coefficient of X being 2.006), and that the same linear trend could not have held all the way back to birth, because if it had, the average newborn would be 31.2 inches tall (which we see in the intercept of 31.200) � which impossibility leads to the inference that in earlier years, growth exceeds two inches per year.

Our summary statistics, however, do not tell us all there is to know. If, for example, we wanted to know the probability of a 13-year-old being taller than 65 inches (in 1877 Boston, anyway), the complete data in Display 1-7 can given us an answer � of 1460 13-year-olds, 28 were more than 65 inches tall, so that the probability is 28/1460 = 0.0192. The summary statistics considered so far, however, are incapable of giving us that answer. But the summary could be expanded � if to it we added the standard error of estimate, a concept beyond the scope of the present book, then we could answer the same question, although our answer might differ slightly from the one we just calculated. Thus, it is possible to expand our summary statistics to reflect more and more features of the data, and still be rewarded with immense gains in parsimony.

Although the information in the raw data will usually remain more detailed and more accurate, it will also usually be so much more cumbersome that summary statistics will be preferred.

Although the chief purpose of summary statistics is to make the presentation of raw data unnecessary, we will see over and over again below that summary statistics can misrepresent the raw data, in which case no gain in parsimony can justify their use. Before accepting summary statistics as faithfully reflecting raw data, therefore, a standard procedure should be to first examine the raw data for certain features which signal that the summary statistics are misleading. The development of the social sciences would be accelerated if raw data were included with every publication, or at least made publicly accessible in a central archive.

We shall find Display 1-7 useful in arriving at further conclusions below.

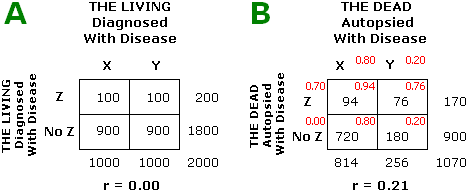

From the the Real to the Simple

|

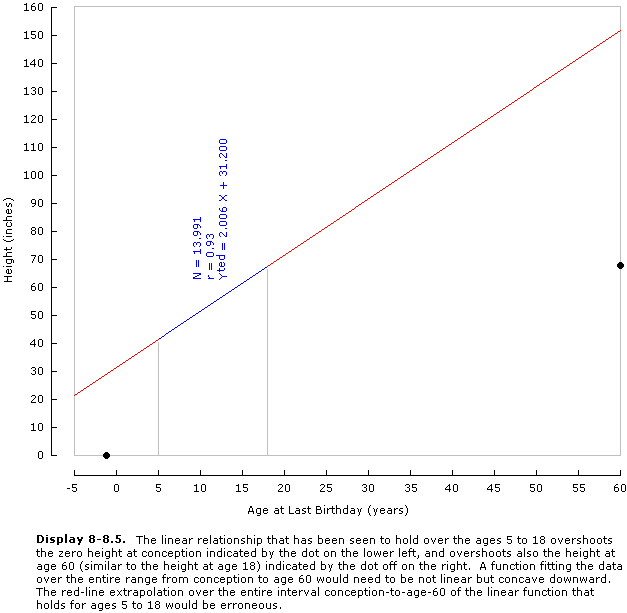

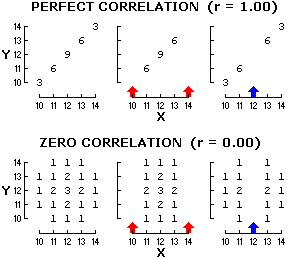

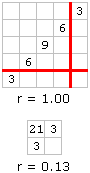

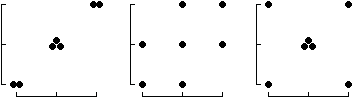

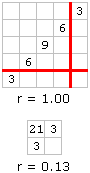

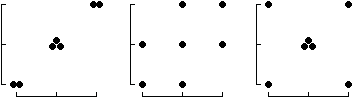

Display 1-8. Three ultra-simple scatterplots whose correlation coefficients are 1.00, 0.50, and 0.00.

|

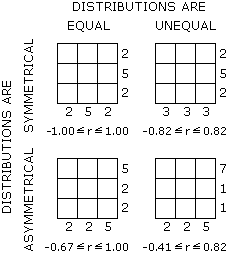

In explaining the principles of correlation and regression to others, it may occasionally be helpful to rely on simple scatterplots that are easily remembered and quickly drawn, as for example the three in Display 1-8, each of which contains only seven dots, with frequency distributions of 2, 3, 2 along both X and Y axes, and presenting correlations of 1.00, 0.50, and 0.00. Nothing more complicated than this is needed to demonstrate the major characteristics of correlation and regression, as for example that each scatterplot contains two regression lines, and that the angle between the regression lines goes from zero in the case of a perfect correlation to 90 degrees in the case of a zero correlation, and that regression toward the mean is bi-directional and does not imply falling variance and increases with the extremity of the predictor and in the presence of low correlations, or that when X and Y scores have the same variance, the correlation coefficient is equal to the slope of the regression line of Y on X. Redrawing the two positively-sloped scatterplots with a negative slope readily demonstrates the same phenomena among negative correlations. These several correlation and regression phenomena are impossible to explain without the help of graphs, and when utmost simplicity is called for, such graphs as these may suffice.

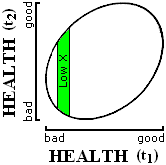

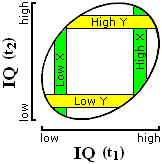

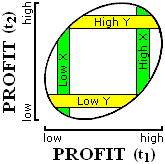

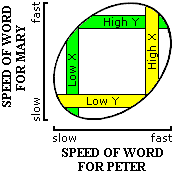

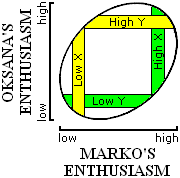

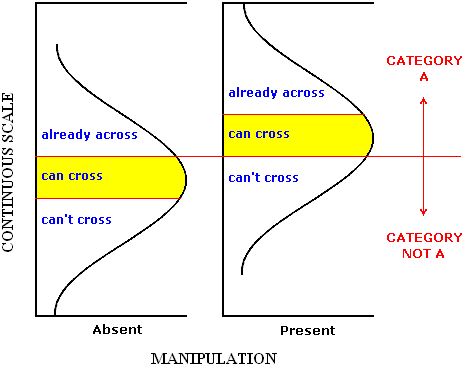

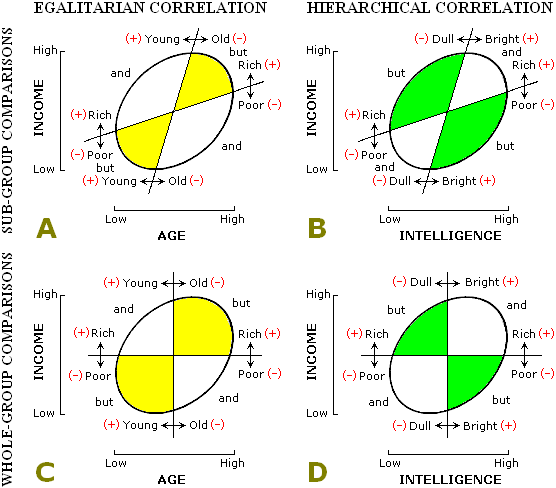

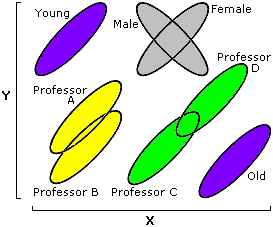

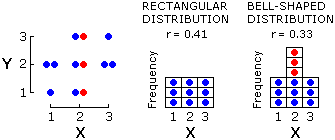

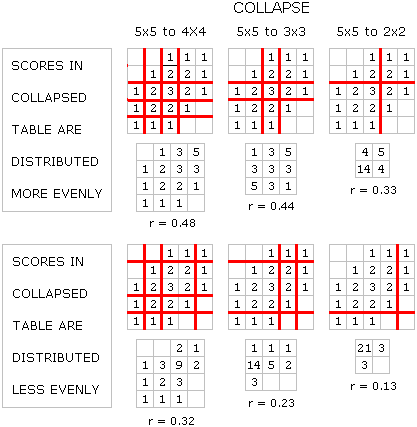

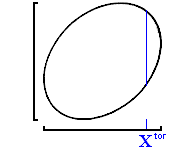

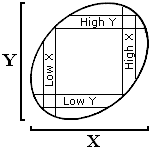

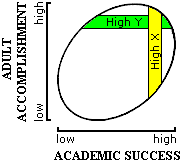

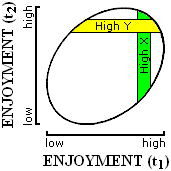

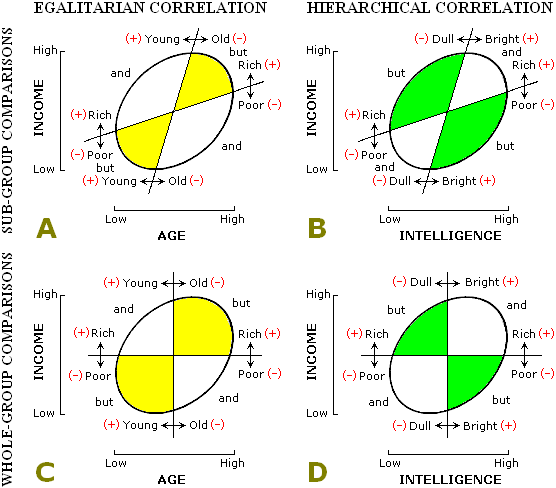

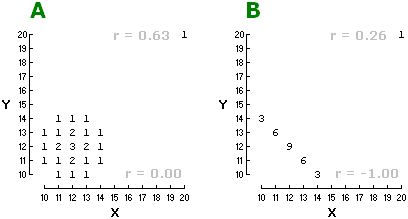

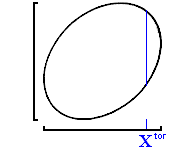

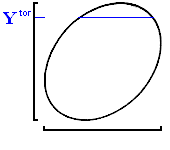

In the cases that we will be discussing below, we will usually not need to know exactly how many data points there are, or what their precise coordinates are, so that we will usually be neither able nor interested in drawing detailed scatterplots such as the ones we have been dealing with in the previous chapter. What we will know, however, will permit us to indicate the approximate shape these data points assume within the graph � when the correlation is positive but not perfect, for example, the data points lie roughly within a positively-sloped ellipse, such as any of the ones in Display 2-1. As this elliptical shape will be all we know about the data, and for our limited purposes, all we need to know, and as it is easier to sketch than a scatterplot which commits itself to specifying the position of every data point, we will rely on the ellipse graph heavily below, and so we must first learn to recognize the basic phenomena of correlation and regression in ellipse graphs.

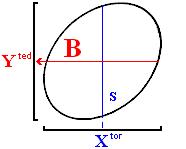

Let us begin, now, by finding regression toward the mean in an ellipse graph, first when predicting Y, and later when predicting X.

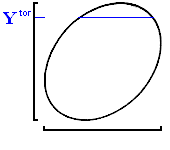

Predicting Y

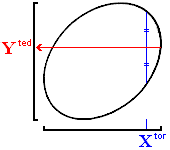

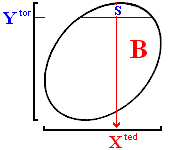

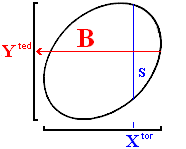

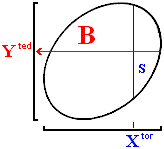

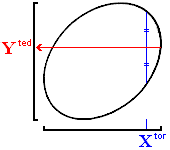

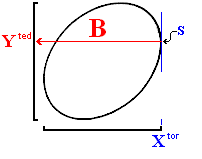

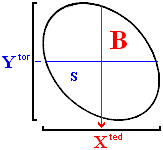

Starting Graph A in Display 2-1, we locate on the X axis the value of X (Xtor)from which we wish to generate a prediction, and moving straight up from it, we draw a vertical blue line inside the ellipse. Think of this vertical line not as a line, but as a column of data points all having the same X value, much like the column S-X in Display 1-2A, to which we restricted attention when the predictor was Father's IQ = 13. We recall, next, that the predicted Y (Yted) that we want is merely the mean Y of all the data points in the column having the given Xtor, and in our idealized data this has always been midway between the uppermost (or highest Y) and lowermost (or lowest Y) data points in a column. In Display 2-1, then, the Yted that we want is opposite the midpoint of the blue line we have just drawn inside the ellipse. Therefore, as shown in the Graph B, from the midpoint of that blue line we draw a red line straight out to the left � wherever we hit the Y axis is Yted.

|

Predicting Y

|

|

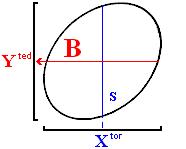

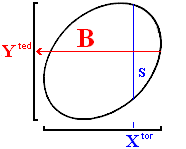

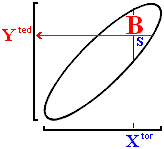

Predicting X

|

|

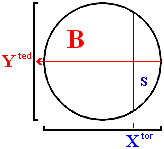

A: Draw a vertical blue line through the ellipse at predictor X (Xtor).

|

|

|

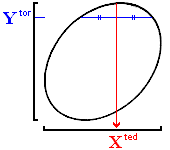

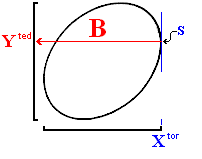

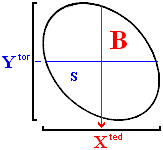

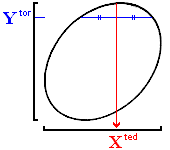

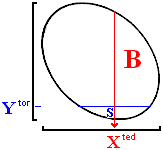

D: Draw a horizontal blue line through the ellipse at predictor Y (Ytor).

|

|

|

B: The predicted Y (Yted) for that Xtor will lie directly to the left of the midpoint of that blue line.

|

|

|

E: The predicted X (Xted) for that Ytor will lie directly below the midpoint of that blue line.

|

|

|

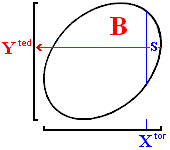

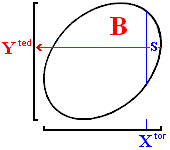

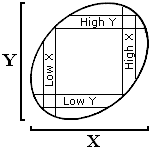

C: Regression toward the mean is evident in Xtor cutting off smaller area S, and Yted cutting off bigger area B.

|

|

|

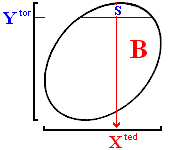

F: Regression toward the mean is evident in Ytor cutting off smaller area S, and Xted cutting off bigger area B.

|

|

|

Display 2-1. Demonstration of regression toward the mean in ellipse graphs which facilitate exposition by doing away with the labor of plotting individual data points. Note that area S (for Small) represents all scores that are more extreme than the predictor (blue line), and that area B (for Big) represents all scores more extreme than the prediction (red line).

|

Where, now, do we see regression? In Graph C, S (for "Small") is the area to the right of the blue Xtor, and B (for "Big") is the area above the red Yted. We see regression in area S being smaller than area B � the predictor cuts off a smaller area than the prediction. As area is proportional to the number of data points contained, the predictor is more extreme than the prediction, which is regression toward the mean. "Prediction" is here being used interchangeably with "predicted."

Predicting X

The manner of predicting X in ellipse graphs is so similar to the manner of predicting Y, that the reader will be able to follow the right-hand column in Display 2-1 without supplementary elaboration here.

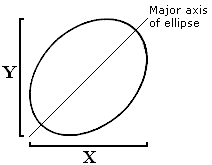

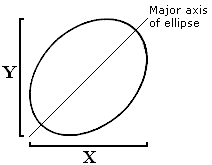

Regression Lines and the Major Axis

Display 2-2, Graph A shows an ellipse with both regression lines, and Graph B shows the same ellipse with its major axis. When a naive author wants to illustrate a regression line, he sometimes shows an ellipse together with its major axis, suggesting a weak understanding of everything that we have been discussing so far above. We see, however, that the major axis doesn't coincide with either regression line, but rather bisects the angle between the two regression lines. Only in the case of a perfect correlation do the two regression lines coincide with the major axis � but this is a case in which the ellipse is so flat that it forms a straight line.

A

|

B

|

|

Display 2-2. The two regression lines in Graph A are shown to be distinct from each other, and distinct from the major axis of the ellipse in Graph B.

|

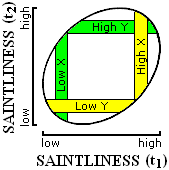

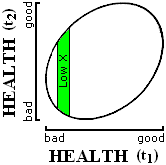

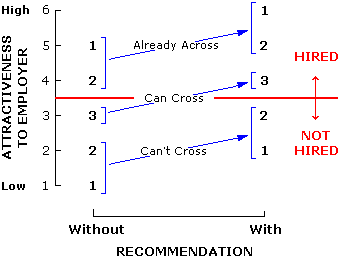

Regression Increases with the Extremity of the Predictor

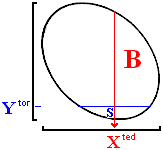

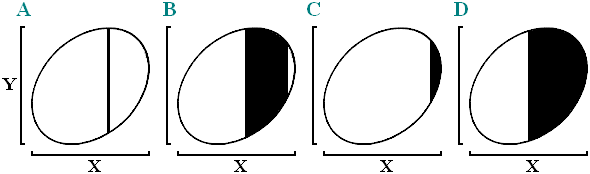

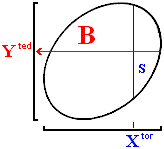

From left to right in Display 2-3, the Xtor, marked by a blue vertical line, moves from the mean to a tangent � which is to say, goes from totally non-extreme to maximally extreme. In each graph, the horizontal red line marking the prediction Yted is constructed so as to bisect the portion of the vertical blue predictor line that lies within the ellipse.

We observe that area S starts off being equal to area B in the left graph, but that as we move to the right and the extremity of the predictor increases, S shrinks more rapidly than B does � which is the same as saying that the more extreme the predictor, the greater the amount of regression.

A:

PREDICTOR IS

THE MEAN

|

B:

PREDICTOR IS

MODERATELY EXTREME

|

C:

PREDICTOR IS

MAXIMALLY EXTREME

|

B = S

NO REGRESSION

|

B > S

SOME REGRESSION

|

B >> S

MOST REGRESSION

|

|

Display 2-3. Ellipse-graph demonstration that regression towards the mean increases with the extremity of the predictor. Note that area S is always the area to the right of the blue Xtor line (except in Graph C where area S has been reduced to a single point on blue Xtor line, if we consider this blue Xtor line to be tangent to the ellipse); and area B is always the area above the red Yted line. As the predictor become more extreme, S shrinks rapidly, while B shrinks slowly, which is the same as saying that as the predictor becomes more extreme rapidly, the prediction becomes more extreme slowly, which is one of the corollaries of the phenomenon known as regression toward the mean.

|

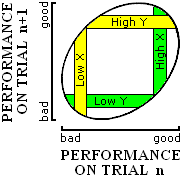

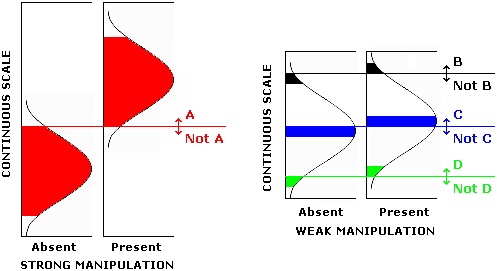

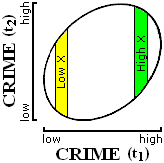

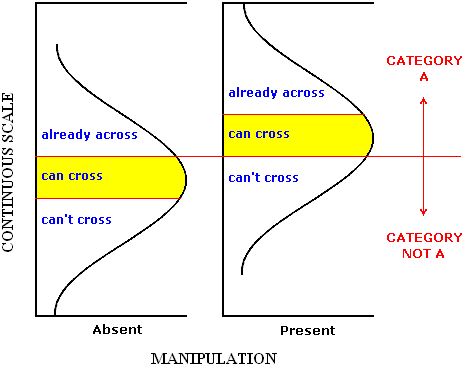

Regression Increases as the Correlation Weakens

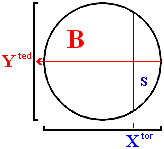

From left to right in Display 2-4, the extremity of the Xtor is kept constant while the correlation is lowered from very high to zero. As we have learned to expect, the blue Xtor line is bisected to locate the red Yted line.

In Graph A, we see that S approximately equals B (almost no regression). As we move to the right, the correlation weakens, and B increases in area relative to S � which is to say, regression increases as the correlation weakens.

A:

Correlation is

STRONG

|

B:

Correlation is

WEAKER

|

C:

Correlation is

ZERO

|

B = S

ALMOST NO REGRESSION

|

B > S

SOME REGRESSION

|

B >> S

COMPLETE REGRESSION

|

|

Display 2-4. Regression increases as the correlation weakens, demonstrated with ellipse graphs.

|

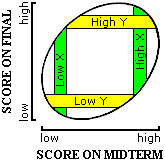

Practice Makes Perfect

You can, and should, learn to sketch out for yourself every imaginable ellipse demonstration of the basic phenomena of regression. To select a phenomenon to sketch, make these four choices:

demonstrate either regression increasing with the extremity of the predictor, or regression increasing as the correlation weakens;

when the correlation is either positive or negative;

when the predictor is either X or Y; and

when the predictor score is either greater than the mean or less.

With four binary choices, the total number of demonstrations is sixteen. Being able to sketch the graphs required for any of these sixteen demonstrations is an excellent test of whether you have grasped the concepts discussed above.

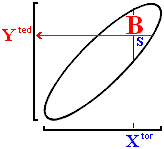

Sample problem. Here, for example, is a sample problem, with solution provided. Demonstrate regression increasing with the extremity of the predictor when the correlation is negative, the predictor is Y, and the predictor score is less than the mean.

The solution. The steps in any general solution are as below; the steps appropriate to the solution of the problem being solved here are italicized, and the resulting ellipse-graph solution appears in Display 2-5.

As two graphs are sufficient for any demonstration, draw axes for two graphs.

Draw an ellipse within each graph. But what ellipse?

When demonstrating the effect of extremity of the predictor, draw two identical ellipses, positively sloped if the correlation is positive, negatively-sloped if the correlation is negative.

When demonstrating the effect of weakening correlation, however, in the first graph draw a positively-sloped ellipse if the correlation is positive and a negatively-sloped ellipse if the correlation is negative, and in the second graph, draw a circle.

Within each ellipse, draw a predictor line to indicate the location of data points having the appropriate value of the predictor � a vertical line when the predictor is X, and a horizontal line when the predictor is Y.

When demonstrating the effect of extremity of the predictor, put the predictor line right through the middle of the ellipse in the first graph (zero extremity), and close to the tangent in the second graph (great extremity).

When demonstrating the effect of weakening correlation, however, put the predictor lines at a constant extremity.

Put vertical predictor lines to the right when the predictor is above the mean, and to the left when below; and put horizontal predictor lines high when the predictor is above the mean, and low when below. The area cut off by this predictor line is area S.

Finally, through the midpoint of the predictor line, draw a perpendicular line with an arrowhead on it to mark the prediction. The area cut off by this prediction line is area B.

The phenomenon of regression toward the mean is demonstrated by the ratio of S to B being close to one in the first graph, and approaching zero in the second.

A:

Predictor is

NOT EXTREME

|

B:

Predictor is

EXTREME

|

B = S

NO REGRESSION

|

B > S

MUCH REGRESSION

|

|

Display 2-5. Demonstration of regression increasing with the extremity of the predictor when the correlation is negative, the predictor is Y, and Ytor is less than the mean.

|

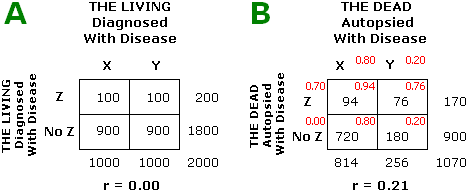

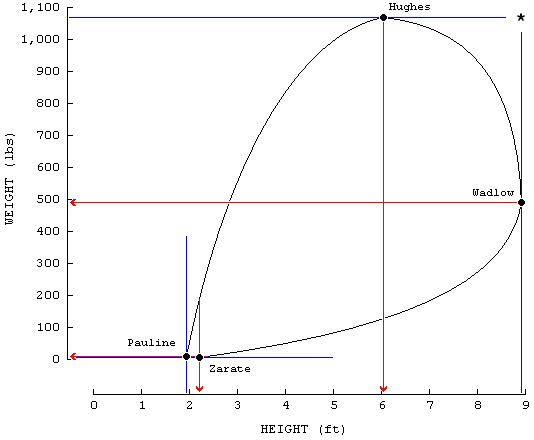

Record Holders

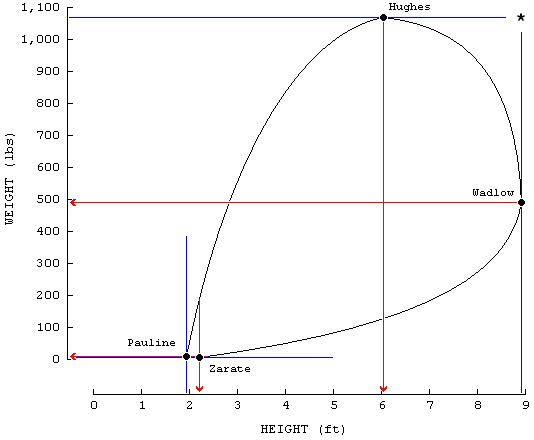

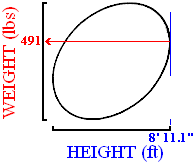

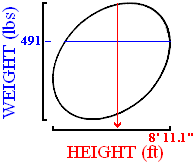

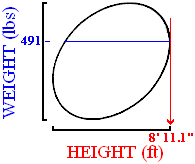

Robert Wadlow was the world's tallest person: 8 feet, 11.1 inches. Do we also expect that he was the heaviest? The question gives us some information on height, and asks us to infer something about weight. To answer the question, we must first decide what kind of correlation we are dealing with.

We can be certain, first of all, that taller people tend to be heavier � which is to say that the correlation between height and weight is positive. We can be certain, furthermore, that the correlation is less than perfect � at any given height, some people are heavier than others. A less than perfect correlation, finally, means that predicted scores will regress.

Now regression tells us that if we examine all the people who measured 8 fee, 11.1 inches, their mean weight will be less extreme than their height. As it happens, there is only one person at 8 feet, 11.1 inches, and so we predict that his weight will be less extreme than his height. No one has a better chance than he does of being heaviest, and yet his chances are slim. And it does turn out that Robert Wadlow's weight of 491 lbs is not as extreme as his height � he was the world's tallest man, but as the world record for weight is 1069 lbs, he is far from the heaviest.

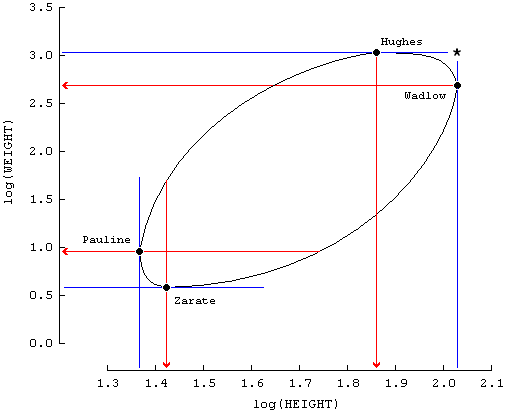

Display 2-6 shows the approximate relation between height and weight. The four world record holders for low and high height and weight serve to outline our ellipse � these four points mark the spots where vertical and horizontal tangents make contact with the ellipse. Note that none of the four blue tangents has an arrowhead on it � because these four lines are predictor lines, not predicted lines.

Display 2-6. Each of the four world-record holders lies on his own tangent (blue line). To demonstrate regression in any individual record holder, the predictor must be the world record (blue tangent). The data for the four record holders (McWhirter, 1977):

Tallest: Robert Wadlow, 8 ft, 11.1 in; 491 lbs.

Heaviest: Robert Earl Hughes, 6 ft, 1/2 in; 1069 lbs.

Shortest: Princess Pauline, 1 ft, 11.2 in; 9 lbs.

Lightest: Lucia Zarate, 2 ft, 2.5 in; 4.7 lbs.

|

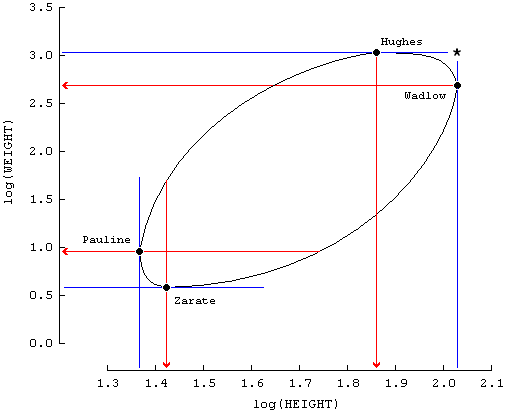

The "ellipse" that the data force us to outline in Display 2-6 is highly irregular, due mainly to short people not being able to vary as much in weight as tall people, so that our use of the word "ellipse" has lost its mathematical meaning, and is now being used to refer to any rounded shape. This irregularity, however, does not affect anything we have to say, and may in fact be largely corrected by plotting not weight against height, but rather the logarithm of weight against the logarithm of height, as is done in Display 2-7, and where something closer to the elliptical shape that we are more used to makes its reappearance. The effect of taking the logarithm of a variable is to magnify its small values. We shall have more to say concerning logarithmic transformations farther below.

Display 2-7. Plotting the logarithms of height (in inches) and weight (still in pounds) produces four data points that can be envisioned as falling on the periphery of an elliptical scatterplot of data, a feat of imagination quite impossible when the raw data were plotted in Display 2-6. The moral is that the simplest units in which it is possible to measure may need to be transformed in order to produce orderly data. The heights and weights in logarithmic units now become:

Tallest: Robert Wadlow, 2.030, 2.691.

Heaviest: Robert Earl Hughes, 1.860, 3.029.

Shortest: Princess Pauline, 1.365, 0.954.

Lightest: Lucia Zarate, 1.423, 0.672.

|

For each of the remaining three record holders, we find that by following our standard procedure for generating predictions, regression can be detected in every instance.

Generally, whenever we come across a record holder on any dimension, our best bet is that even though that person has the highest probability of holding the record on an imperfectly-correlated dimension, he is unlikely to hold the record on that second dimension. Our bet, of course, becomes safer the more weakly the two dimensions are correlated.

Viewed from a different perspective, this principle may be expressed as follows. For a single person to be both as tall as Wadlow and as heavy as Hughes, he would have to fall in the position indicated by an asterisk in Display 2-6. For anyone to fall in this position, however, is unlikely because it lies far outside the ellipse which encloses the data points. That is, if the data assume any rounded shape, which we are here calling "elliptical," and if the holder of a single record lies on a tangent to this ellipse, then it is not possible for him to also fall on a second tangent perpendicular to the first � and yet in order to hold a record on a second dimension, that person would have to lie on that second tangent as well. Thus, a record holder on one dimension is unlikely to be a record holder on a second dimension.

As we know that a given person does occasionally hold records on more than two dimensions, we should consider what circumstances sometimes do make the unlikely come to pass:

A multiple record holder is more probably the more highly correlated the dimensions are. When the correlation is perfect, in fact, the record holder on one dimension is necessarily the record holder on the other dimension as well.

A multiple record holder is more probably the smaller the number of data points under consideration. One data point by itself, to take the extreme case, holds four records � highest and lowest on X, as well as highest and lowest on Y. To take the next most extreme case, with only two data points, each one holds two records � whichever is highest on X, for example, must also be either highest or lowest on Y. With three data points, as least one is guaranteed to be a multiple record holder. With the number of data points just above three, a multiple record is not longer guaranteed, but is still highly likely. As the number of data points climbs still further, however, the probability of a multiple record holder falls. When the number of data points reaches millions or billions, multiple record holders become almost non-existent.

Even with a large number of data points and a weak correlation, a multiple record holder can still occur. This is because the ellipse indicating the distribution of data points is meant to indicate not the boundary beyond which no data point may ever fall, but only the boundary beyond which data points become rare � so if the rare data point does stray outside the ellipse and does set records on both dimensions, we will consider that as a rare deviation from a usually dependable generalization.

How not to check up on regression

What is to stop someone from pointing out that Robert Wadlow weighs 491 lbs (which is extreme, but not a record), and then predicting that on height, he will be even less extreme. Then, when Robert Wadlow's height is discovered to be more extreme, why not say that regression is disconfirmed?

This seeming disconfirmation of regression is fallacious because it relies on an illegitimate test of regression. Looking back at Display 1-2A, we see that when X = 10, the regression to Yted = 11 is not replicated in every data point. There exists, in addition to point B whose Y = 11 has "regressed" correctly, point C whose Y = 10 has not "regressed" at all, as well as point A whose Y = 12 has "regressed" too far � has shot one unit past mean Y = 11. I am putting "regressed" in quotation marks because the word is not meant to be applied to individual data points in a column � it is meant to be applied to the mean Y of all the data points in a column (and, when predicting Y, regression applies to the mean of all the data points in a row.) The Yted in the X = 10 column, then, does regress; the individual data points in that column do various things.

Our conclusion, then, is that the only valid check on regression is one which looks at all the data having the value of the predictor: all the data in a given column when predicting Y, or all the data in a given row when predicting S. Selecting one data point out of the many available at a given value of the predictor, and saying that it disconfirms regression, makes the error of excluding from consideration relevant data.